From Amazon’s website

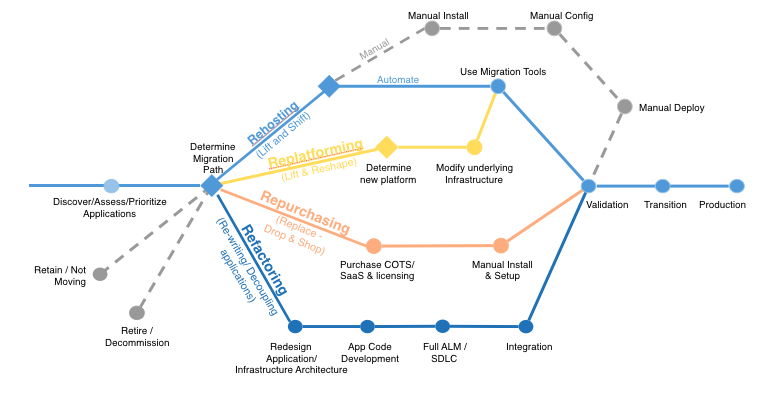

The image above shows the possible strategies. Let's look at each of them in more detail.

- Rehosting, or lift-and-shift. Imagine an old three-tier application from around the year 2000 that is still running unchanged. It has nothing new: no high-availability/disaster-recovery (HA/DR) design, no SOA/Web Services or microservices (see https://aws.amazon.com/microservices, "Microservices" on Wikipedia, or "What is a Microservices Architecture?" by TIBCO Software), no virtualisation, and no infrastructure automation tools such as Puppet or Jenkins (see "Infrastructure as a service" on Wikipedia). Add to it a complete OLTP, ETL and data warehouse information system, with no data lake (see https://aws.amazon.com/solutions/implementations/data-lake-solution, "Data Lake" by IBM, or "Cloud Data Lakes for Dummies" by Snowflake), no NoSQL and no big data features. Even such a system can be migrated as-is: you create the database and application servers, transfer the data into the Cloud, and only then start using the features the Cloud already offers, such as Auto Scaling for the OLTP tier (see "What is Amazon EC2 Auto Scaling?" in the Amazon EC2 Auto Scaling documentation), or planning how to turn the data warehouse into a data lake. If you already run virtual machines instead of physical servers, it is even simpler: you transfer the VMs into the Cloud, for example with Amazon's VM Import/Export tool, or manually, as shown in the migration path. Likewise, an application that already has some or all of the modern features mentioned above can be moved as-is and then start benefiting from Cloud concepts such as edge locations (see "What is an Edge Location in AWS" on the Edureka Community), disaster recovery between geographically separate data centers (see "What are data centers? How they work and how they are changing in size and scope" in Network World) or real-time data lakes. As Amazon's website explains, it is sometimes easier to migrate the application first and only then make the architectural improvements.

- Replatforming, or lift and reshape. Once your databases and application servers are in the Cloud, some tasks may no longer need to be done by your own teams; the Cloud provider's team can take them over. For example, some databases can become Database as a Service (see https://www.stratoscale.com/blog/dbaas/what-is-database-as-a-service/): there is no longer any need to manage tablespaces or ASM disk groups (an Oracle feature), or to upgrade to new versions, because the Cloud team does it. What is left for the former DBA team, then? Applications still need to be tuned and deployed, and their design still needs improving. Some DBAs may not like the Database as a Service model, but the innovative part of the job remains on their side. Another example, mentioned on Amazon's website, is replacing a costly licensed application server (WebSphere/WebLogic) with an open-source Apache server, which is also part of the replatforming strategy.

- Repurchasing. Simply put, you replace your internal CRM product with one hosted in the Cloud, which then becomes SaaS (Software as a Service, see https://en.wikipedia.org/wiki/Software_as_a_service), for example Salesforce, a true CRM delivered from the Cloud.

- Refactoring / Re-architecting. Now it's time to explain what a full on-premises-to-Cloud migration with a complete change of architecture would mean. Imagine an old architecture: an Oracle database for both the OLTP system and the data warehouse, Informatica as the ETL tool, and WebLogic as the application server. A nightly job loads the data into the data warehouse, so it is available to the OLAP and BI teams only on day +1. An SOA architecture may also be present on the application-server side (for example, with all application servers accessed through Citrix). Now suppose we migrate the application to the Cloud and move the database to Amazon RDS (see https://aws.amazon.com/fr/rds/), for example with PostgreSQL as an open-source engine; we split our SOA into a microservices architecture; and all data is written at the same time into the OLTP PostgreSQL database and into a data lake that also holds unstructured data (MongoDB), with both the OLTP and data lake databases horizontally scalable. The data then becomes available immediately and can be analysed by AI tools in real time! This really matters for some applications, because much of the value of data can be lost if it is not exploited in near real time. Imagine a supermarket that wants to send promotional SMS messages to customers while they are shopping, with the promotion based on the products they are buying at that very moment…

- Retire or Retain. In the first case, you decommission: you stop the existing applications and drop the databases that are no longer used (perhaps after archiving them to long-term storage which, again, can be in the Cloud 🙂 see https://aws.amazon.com/fr/s3/). In the second case, you do nothing for now.
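For rehosting, the bullet above mentions Auto Scaling as one of the first Cloud features to apply once the servers have been moved. The idea behind target-based scaling can be sketched as a simple proportional rule: add or remove instances so that the average load per instance moves back toward a target, within the group's minimum and maximum size. This is a simplified model under our own assumptions, not the exact algorithm Amazon EC2 Auto Scaling uses; the function name and the CPU figures are hypothetical.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Simplified proportional scaling rule (an assumption, not AWS's exact algorithm).

    current  -- number of running instances
    metric   -- observed per-instance load, e.g. average CPU %
    target   -- load per instance we want to keep, e.g. 60 (% CPU)
    """
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    # Scale the fleet in proportion to how far the load is from the target,
    # rounding up so we never under-provision.
    proposed = math.ceil(current * metric / target)
    # Respect the group's configured bounds.
    return max(min_size, min(max_size, proposed))


# Busy period: 4 application servers at 90 % average CPU, aiming for 60 %.
print(desired_capacity(4, 90.0, 60.0, min_size=2, max_size=10))  # 6
# Quiet night: 4 servers at 15 % CPU scale in, but never below the minimum of 2.
print(desired_capacity(4, 15.0, 60.0, min_size=2, max_size=10))  # 2
```

With Amazon EC2 Auto Scaling itself you would not write this logic: you configure a target tracking policy (for example, an average CPU utilisation target) on the Auto Scaling group and the service adjusts the capacity.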
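For replatforming, the Database as a Service idea can be made concrete with Amazon RDS: tasks a DBA team used to handle by hand (storage layout, backups, minor version upgrades, a standby for failover) become parameters of the database instance. The sketch below only builds the keyword arguments for boto3's RDS `create_db_instance` call, so it runs without AWS credentials. The PostgreSQL engine, instance class, storage size, identifier and user name are assumptions chosen for illustration.

```python
def rds_postgres_request(identifier: str, password: str) -> dict:
    """Keyword arguments for boto3's RDS create_db_instance (all values illustrative)."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": "postgres",               # assumed engine; RDS also offers Oracle and others
        "DBInstanceClass": "db.m5.large",   # assumed instance size
        "AllocatedStorage": 100,            # GiB managed by RDS: no ASM disk groups to maintain
        "MasterUsername": "app_admin",      # hypothetical administrator name
        "MasterUserPassword": password,
        "BackupRetentionPeriod": 7,         # automated backups kept for 7 days
        "AutoMinorVersionUpgrade": True,    # minor engine upgrades applied in the maintenance window
        "MultiAZ": True,                    # standby copy in another Availability Zone
    }


request = rds_postgres_request("crm-db", password="change-me")
print(sorted(request))
# With boto3 installed and AWS credentials configured, the request would be sent with:
#   boto3.client("rds").create_db_instance(**request)
```

Keeping the request as plain data makes it easy to review or version-control before anything is created in the Cloud.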
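The refactoring scenario (every purchase written to the OLTP database and the data lake at the same time, then acted on immediately instead of after a nightly ETL run) can be illustrated with a toy, in-process model. This is a sketch under loud assumptions, not a real AWS architecture: `sqlite3` stands in for PostgreSQL on RDS, a list of JSON strings stands in for the data lake, a dictionary of product-to-offer rules stands in for the AI tools, and `Pipeline`, `record_purchase` and the sample data are hypothetical.

```python
import json
import sqlite3
from dataclasses import dataclass, field


@dataclass
class Pipeline:
    """Toy model of the refactored flow (hypothetical names throughout)."""
    promotions: dict  # product just bought -> promotional SMS text
    oltp: sqlite3.Connection = field(default_factory=lambda: sqlite3.connect(":memory:"))
    lake: list = field(default_factory=list)      # raw events kept for later analytics
    sent_sms: list = field(default_factory=list)  # (phone, message) pairs "sent"

    def __post_init__(self) -> None:
        self.oltp.execute(
            "CREATE TABLE purchases (customer TEXT, product TEXT, qty INTEGER)")

    def record_purchase(self, customer: str, phone: str, product: str, qty: int) -> None:
        # 1. Structured row for the transactional (OLTP) system, committed at once.
        with self.oltp:
            self.oltp.execute("INSERT INTO purchases VALUES (?, ?, ?)",
                              (customer, product, qty))
        # 2. The same event, kept raw in the data lake.
        self.lake.append(json.dumps({"customer": customer, "product": product, "qty": qty}))
        # 3. React while the customer is still in the shop: no day +1 delay.
        offer = self.promotions.get(product)
        if offer is not None:
            self.sent_sms.append((phone, offer))


p = Pipeline(promotions={"pasta": "Tomato sauce -20% at checkout today"})
p.record_purchase("alice", "+44 7700 900001", "pasta", 2)
p.record_purchase("bob", "+44 7700 900002", "milk", 1)
print(p.sent_sms)  # [('+44 7700 900001', 'Tomato sauce -20% at checkout today')]
print(len(p.lake))  # 2
```

In a real deployment these three steps would usually be decoupled, for example by publishing each purchase event to a stream consumed separately by the OLTP writer, the data lake loader and the promotion service; the in-process version only shows that the data is usable the moment it arrives.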
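For the retire case, archiving old databases to Amazon S3 before dropping them can be automated with a bucket lifecycle rule that moves the dumps to a cheaper archival storage class and eventually deletes them. The sketch below builds such a configuration as a Python dictionary in the shape the S3 lifecycle API expects. The prefix, the 30-day and 10-year periods, and the choice of the `DEEP_ARCHIVE` storage class are assumptions to adapt to your own retention policy.

```python
import json


def archive_lifecycle(prefix: str, days_to_archive: int, days_to_expire: int) -> dict:
    """S3 lifecycle configuration for dumps of retired databases stored under `prefix`."""
    # Our own sanity check: deleting before archiving would make the rule pointless.
    if days_to_expire <= days_to_archive:
        raise ValueError("expiration must come after the archive transition")
    return {
        "Rules": [{
            "ID": "archive-retired-databases",
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            # Move objects to S3 Glacier Deep Archive after `days_to_archive` days...
            "Transitions": [{"Days": days_to_archive, "StorageClass": "DEEP_ARCHIVE"}],
            # ...and delete them once the retention period is over.
            "Expiration": {"Days": days_to_expire},
        }]
    }


config = archive_lifecycle("retired/crm-db/", days_to_archive=30, days_to_expire=3650)
print(json.dumps(config, indent=2))
```

With boto3 installed and credentials configured, this dictionary would be passed as the `LifecycleConfiguration` argument of an S3 client's `put_bucket_lifecycle_configuration` call, together with the (hypothetical) archive bucket name.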

To be continued